Having extensive experience in the life sciences industry, I am used to a keen focus on quality. On occasion, quality was blamed for delays or for projects taking “too long”. This was particularly the case with regulated systems, where computer system validation was a requirement. Sometimes these were excuses. But it was also the case that unnecessary overhead was generated: work or documentation that did not help or positively impact the desired outcome and was therefore, strictly speaking, not necessary.

A project is launched to achieve a business outcome and its associated requirements. Along the way, many things can go wrong. I like referring to the work of Amy Edmondson (Harvard Business School), in which she describes three types of failure: 1) (potentially) intelligent failures, where the answer cannot be known without experimenting; 2) basic failures, which we wish we would never experience; and 3) preventable complex system failures. The last are situations where we have good processes and protocols, but a combination of internal and external factors comes together to produce a failure.

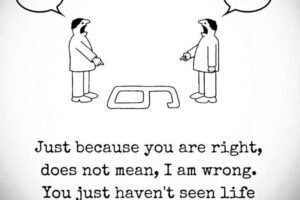

A quality management system needs to allow for experimentation but segregate it from other types of activity. It can then guide the organization to avoid basic failures. Detecting preventable complex system failures requires a degree of sophistication: the ability to analyze symptoms and issues in a way that lets them be fully explored. These underlying issues are often detected by team members further down the organization. Creating an environment where everyone can be heard, with sufficient psychological safety to speak up, is critical.

Balancing quality, cost and time will always involve a dynamic tension. Acknowledging this tension and actively managing it will help drive success.